Overview

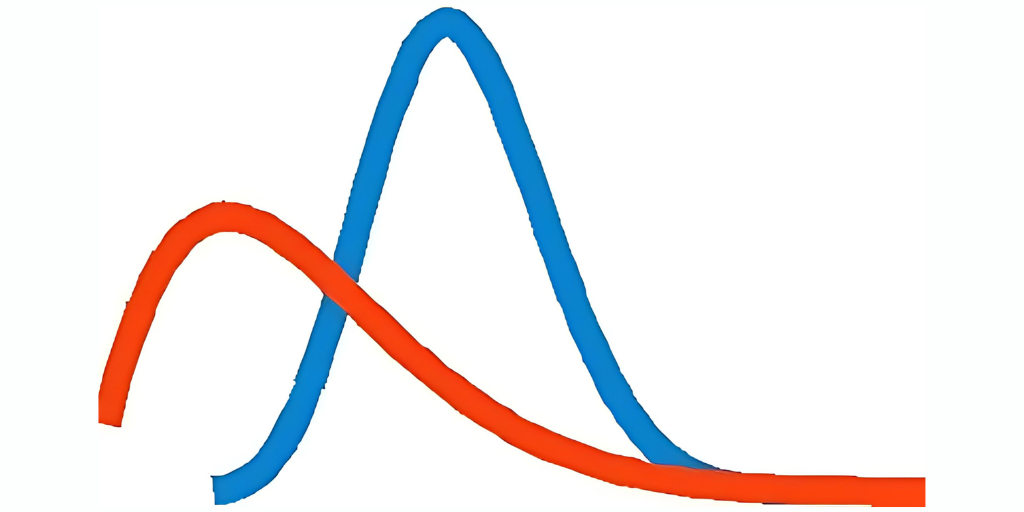

This course introduces the Bayesian approach to statistics, starting with the concept of probability and moving to the analysis of data. We will learn about the philosophy of the Bayesian approach as well as how to implement it for common types of data. We will compare the Bayesian approach to the more commonly taught frequentist approach and see some of its benefits. In particular, the Bayesian approach allows for better accounting of uncertainty, results that are more intuitive and easier to interpret, and more explicit statements of assumptions. This course combines lecture videos, computer demonstrations, readings, exercises, and discussion boards to create an active learning experience. For computing, you can choose between Microsoft Excel and R, a freely available open-source statistical package, with equivalent content for both options. The lectures provide some of the basic mathematical development as well as explanations of philosophy and interpretation. Completing this course will give you an understanding of the concepts of the Bayesian approach, an understanding of the key differences between the Bayesian and frequentist approaches, and the ability to do basic data analyses.

Syllabus

- Probability and Bayes’ Theorem

- In this module, we review the basics of probability and Bayes’ theorem. In Lesson 1, we introduce the different paradigms or definitions of probability and discuss why probability provides a coherent framework for dealing with uncertainty. In Lesson 2, we review the rules of conditional probability and introduce Bayes’ theorem. Lesson 3 reviews common probability distributions for discrete and continuous random variables.

- Statistical Inference

- This module introduces concepts of statistical inference from both frequentist and Bayesian perspectives. Lesson 4 takes the frequentist view, demonstrating maximum likelihood estimation and confidence intervals for binomial data. Lesson 5 introduces the fundamentals of Bayesian inference. Beginning with a binomial likelihood and prior probabilities for simple hypotheses, you will learn how to use Bayes’ theorem to update the prior with data to obtain posterior probabilities. This framework is then extended with the continuous version of Bayes’ theorem to estimate continuous model parameters and to calculate posterior probabilities and credible intervals.

- Priors and Models for Discrete Data

- In this module, you will learn methods for selecting prior distributions and building models for discrete data. Lesson 6 introduces prior selection and predictive distributions as a means of evaluating priors. Lesson 7 demonstrates Bayesian analysis of Bernoulli data and introduces the computationally convenient concept of conjugate priors. Lesson 8 builds a conjugate model for Poisson data and discusses strategies for selection of prior hyperparameters.

- Models for Continuous Data

- This module covers conjugate and objective Bayesian analysis for continuous data. Lesson 9 presents the conjugate model for exponentially distributed data. Lesson 10 discusses models for normally distributed data, which play a central role in statistics. In Lesson 11, we return to prior selection and discuss ‘objective’ or ‘non-informative’ priors. Lesson 12 presents Bayesian linear regression with non-informative priors, which yield results comparable to those of classical regression.
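To give a flavor of the updating described in Lessons 5 and 7, here is a minimal sketch and not course material. It is written in Python as an assumption of convenience, since the course itself uses Excel or R. The function names, the two candidate success rates, and the data (4 successes in 5 trials) are all hypothetical and chosen only for illustration. The first function applies Bayes’ theorem to prior probabilities over simple hypotheses with a binomial likelihood. The second uses the standard conjugate result that a Beta(a, b) prior combined with binomial data gives a Beta(a + successes, b + failures) posterior.

```python
from math import comb


def discrete_posterior(prior, successes, trials):
    """Update prior probabilities over candidate success rates via Bayes' theorem.

    `prior` maps each hypothesized success rate theta to its prior probability.
    """
    # Unnormalized posterior: prior times binomial likelihood for each hypothesis.
    unnormalized = {
        theta: p * comb(trials, successes)
        * theta**successes * (1 - theta) ** (trials - successes)
        for theta, p in prior.items()
    }
    # Dividing by the total (the marginal likelihood of the data) normalizes.
    total = sum(unnormalized.values())
    return {theta: w / total for theta, w in unnormalized.items()}


def beta_binomial_update(a, b, successes, trials):
    """Conjugate update: Beta(a, b) prior plus binomial data gives a Beta posterior."""
    return a + successes, b + (trials - successes)


# Hypothetical example: is a coin fair (0.5) or loaded (0.7)? Equal prior weight.
prior = {0.5: 0.5, 0.7: 0.5}
posterior = discrete_posterior(prior, successes=4, trials=5)

# Hypothetical example: uniform Beta(1, 1) prior on the success rate, same data.
a_post, b_post = beta_binomial_update(1, 1, successes=4, trials=5)
posterior_mean = a_post / (a_post + b_post)

print(posterior)
print(a_post, b_post, posterior_mean)
```

With these made-up numbers, the data shift probability toward the loaded-coin hypothesis, and the conjugate update needs no integration at all, which is the computational convenience Lesson 7 refers to.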